Model Training Suite System Administrator Guide

System Administrator Guide

This guide provides instructions for installing and maintaining the training and production environments for Babel Street Model Training Suite.

The training section contains installation instructions for the complete Model Training Suite. Included components are Analytics Server, Adaptation Studio, Entity Training Server, and Event Training Server. Your installation may include one or both training servers.

The production section contains installation instructions for a production environment, as well as how to perform event and entity extraction. Included are instructions for moving trained models from the training environment into the production environment.

Install the training environment

The full training installation consists of the following components:

Analytics Server, including Entity Extractor

Adaptation Studio (RAS)

Entity Training Server (EnTS)

Event Training Server (EvTS)

An installation of Model Training Suite may include one or both of the training servers.

The components can be installed on separate machines or all together on a single machine. One machine is adequate for light loads and configuration testing. For production work, large projects, or multiple projects, we recommend installing on multiple machines.

For either install, you will need to know the fully qualified host name where a component is installed. The training servers can be installed on the same server. For the three machine install, you will need the three host names. For a single machine install, you only need the one name.

Important

For all Docker installations, localhost is not an acceptable name; the hostname must be addressable from within the Docker containers.

To find the host name for a machine, run the command hostname -f on the machine.

Docker compose configuration

When you extract the zip files, each server directory will contain the following 2 files for Docker:

docker-compose.yml.envTip

The

.envfile is a hidden file. All file names that start with a . are hidden. Typels -ato list the hidden files along with the other files in the directory.

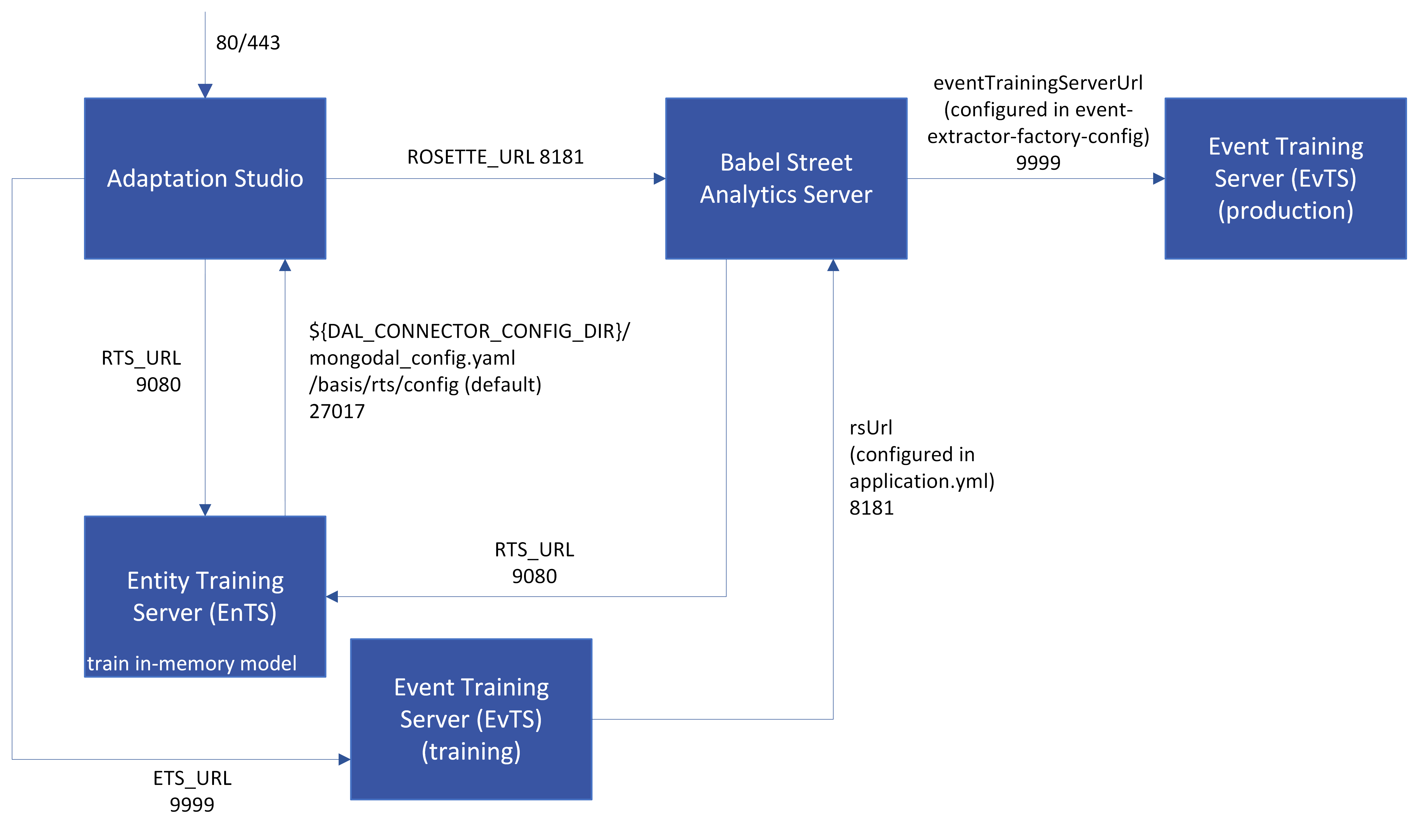

The directories used to connect the components, as shown in the figure below, are defined in the .env for each product. To view or change a value, edit the .env file, not the docker-compose.yml file.

In this diagram, the same instance of Analytics Server is shared by the training and production environments. All ports are configurable; the defaults are displayed.

Example .env file for RTS

RTS_PORT=9080 # Default /basis/rts/workspaces WORKSPACE_ROOT=/basis/rts/workspaces # Default /basis/rts # Wordclasses need to go into this directory ASSETS_ROOT=/basis/rts # Default /basis/rts/config # File is mongodal_config.yaml DAL_CONNECTOR_CONFIG_DIR=/basis/rts/config # The release script will update this variable. REX_TRAINING_SERVER_IMAGE=rex-training-server:0.4.2 # See https://www.ibm.com/support/knowledgecenter/SSD28V_liberty/com.ibm.websphere.wlp.core.doc/ae/twlp_admin_customvars.html # for details on the contents of this file. JVM_OPTIONS=/basis/rts/config/jvm.options # See https://www.ibm.com/support/knowledgecenter/SSEQTP_liberty/com.ibm.websphere.wlp.doc/ae/cwlp_config.html# for details on the contents of this file. SERVER_XML=/basis/rts/config/server.xml # Where to store RTS logs RTS_LOGS=/basis/rts/logs # The maximum number of training threads at any one time RTS_CONCURRENT_TRAIN_THREADS=2 # The maximum number of threads serializing models at any one time RTS_CONCURRENT_SERIALIZE_THREADS=1 # The maximum number of threads creating wordclasses at any one time RTS_CONCURRENT_WORDCLASS_THREADS=2

The variable values set in the .env file are used in the docker-compose.yml file:

version: '3'

services:

rex-training-server:

# https://docs.docker.com/compose/compose-file/#restart

# no, default, does not restart a container under any circumstance

# always, the container always restarts

# on-failure, restarts a container if the exit code indicates an on-failure error

# unless-stopped, always restarts a container, except when the container is stopped

# https://github.com/docker/compose/issues/3672 no must be in quotes

restart: "no"

image: ${REX_TRAINING_SERVER_IMAGE}

volumes:

- ${WORKSPACE_ROOT}:/basis/rts/workspaces

- ${ASSETS_ROOT}:/basis/rts

- ${REXJE_ROOT}:/basis/rts/root

# The file mongodal_config.yaml must exist in this directory

- ${DAL_CONNECTOR_CONFIG_DIR}:/basis/rts/config

- ${RTS_LOGS}:/logs# Optionally override JVM settings here, default -Xms8G -Xmx16G#

- ${JVM_OPTIONS}:/config/jvm.options

# Optionally override JVM settings here,default -Xms8G -Xmx16G

# - ${JVM_OPTIONS}:/config/jvm.options

# Optionally override Server settings here

# - ${SERVER_XML}:/config/server.xml

environment:

- AS_MONGO_DAL_CONNECTOR_CONFIG_DIR=/basis/rts/config

- rexje_root=/basis/rts/root

- RTS_CONCURRENT_TRAIN_THREADS=${RTS_CONCURRENT_TRAIN_THREADS}

- RTS_CONCURRENT_SERIALIZE_THREADS=${RTS_CONCURRENT_SERIALIZE_THREADS}

- RTS_CONCURRENT_WORDCLASS_THREADS=${RTS_CONCURRENT_WORDCLASS_THREADS}

ports:

- ${RTS_PORT}:9080Specifying Service Restart Policy

The service restart policy for each service can be specified in the docker-compose.yml files by specifying the restart parameter. This allows containers to be restarted on server reboot, Docker service restart, etc. Restart can be one of "no", always, on-failure, unless-stopped. The default is no if not specified.

Example for the Entity Training Server docker-compose.yml:

version: '3 '

services:

rex-training-server:

restart: "no"

...Prerequisites

These prerequisites are for the training environment.

Important

Recommended Operating System: 64 bit Linux or macOS.

Windows deployment (including Docker Desktop for Windows) is not tested or supported at this time. Windows users using Windows 10 pro or Windows Server 2016 or 2019 should run MTS in a Linux virtual machine under Hyper-V or VMWare Workstation.

Note

Chrome and Firefox are the supported browsers for Adaptation Studio.

Note

To import models into Adaptation Studio from the command line, the utility jq must be installed on your system.

You must install the files for Analytics Server, Entity Training Server, Event Training Server, and Adaptation Studio in different directories or on different computers. We recommend installing the Entity and Event training servers on the same machine.

The machines for Adaptation Studio, Entity Training Server, and Event Training Server must have Docker and docker compose installed.

Before installing any components, create the top-level directory for all components with proper permissions on each machine.

In this example, the install directory (<installDir>) is

/basis.sudo mkdir /basis sudo chmod 2777 /basis

Resource | Requirement |

|---|---|

CPU | 4 virtual CPU cores |

Memory | 32 GB |

Disk Space | 100 GB recommended for multiple small/medium projects. The actual amount required is determined by size and number of active projects. |

Resource | Requirement |

|---|---|

CPU | 4 virtual CPU cores |

Memory | 32 GB |

Disk Space | 500 GB recommended for multiple small/medium projects. The actual amount required is determined by the size and number of active projects. |

Resource | Requirement |

|---|---|

CPU | 4 virtual CPU cores |

Memory | 16 GB |

Disk Space | 500 GB recommended for multiple small/medium projects. The actual amount required is determined by the size and number of active projects. |

Resource | Requirement |

|---|---|

CPU | GPU recommended (Nvidia G4dn or equivalent, 16 GB memory) 4 virtual CPU cores |

Memory | 16 GB |

Disk Space | 125 GB SSD |

Single System Installation Prerequisites

On a single system, the following disk space is required for installation only. More space is needed to run the system. These numbers assume all components are installed.

Installers (all uncompressed files): 91 GB

Installed (everything in the default

/basisdirectory): 125 GB

Tip

If you choose auto-partitioning when installing the operating system, you may need to override the default install to ensure that /root gets enough space. For example, some Linux installs default to 70 GB for /root, which is not enough to install the entire system in /basis.

Shipment

The training shipment contains the following files:

rs-installation-<version>.zip: Files for Analytics Server. The size of the file is dependent on the number of languages included. This file may be shipped separately.

ets-installation-<version>.zip: Files for Event Training Server.

rts-installation-<version>.zip: Files for Entity Training Server.

coref-installation-<version>.zip: Files for in-document coreference.

Files for Adaptation Studio. The file in the shipment will be one of the following, depending on the configuration shipment.

ras-ets-<version>.zip: Files for Adaptation Studio for event model training.

ras-rts-<version>.zip: Files for Adaptation Studio for entity model training.

ras-ets-rts-<version>.zip: Files for Adaptation Studio for event and entity model training.

model-training-suite-documentation-<version>.zip: Documentation files.

System_Administrator_Guide-en.pdf: This guide.

Developing_Models-en.pdf: A guide for system architects and model administrators to aid in defining the modeling strategy and understanding the theory of model training.

Adaptation_Studio_User_Guide-en.pdf: A guide for the managers and annotators using Adaptation Studio

MTS-release-notes-<version>.pdf: Release notes.

eventTest.etsmodel: Sample Event Training Server project.

Adaptation_Studio_Events_tutorial_1_0_x.zip: A complete tutorial for events, including sample documents

You will need the license file during installation. The license file may be shipped separately.

rosette-license.xml: License key file. During the installation of Analytics Server you will be prompted for the location of this file.

Installation log files

A log file is created as each server is installed. All install questions, responses, are logged, along with all actions taken to install the server. Actions during enable and disable SSL are also logged. The files are created in the install directory with the name:

install-<scriptname>.sh.<date>_<time>.log

where scriptname is rs, rts, ets, or ras:

rs: Analytics Server

rts: Entity Server

ets: Event Server

ras: Adaptation Studio Server

For example, an installation of Analytics Server (rs) installed on 10/12/21 at 7:59 am would create the file:

install-rs.sh.10-12-21_07-59.log

Install Analytics Server

Tip

It is recommended that Analytics Server is installed stand-alone. However, Model Training Suite can support a containerized version.

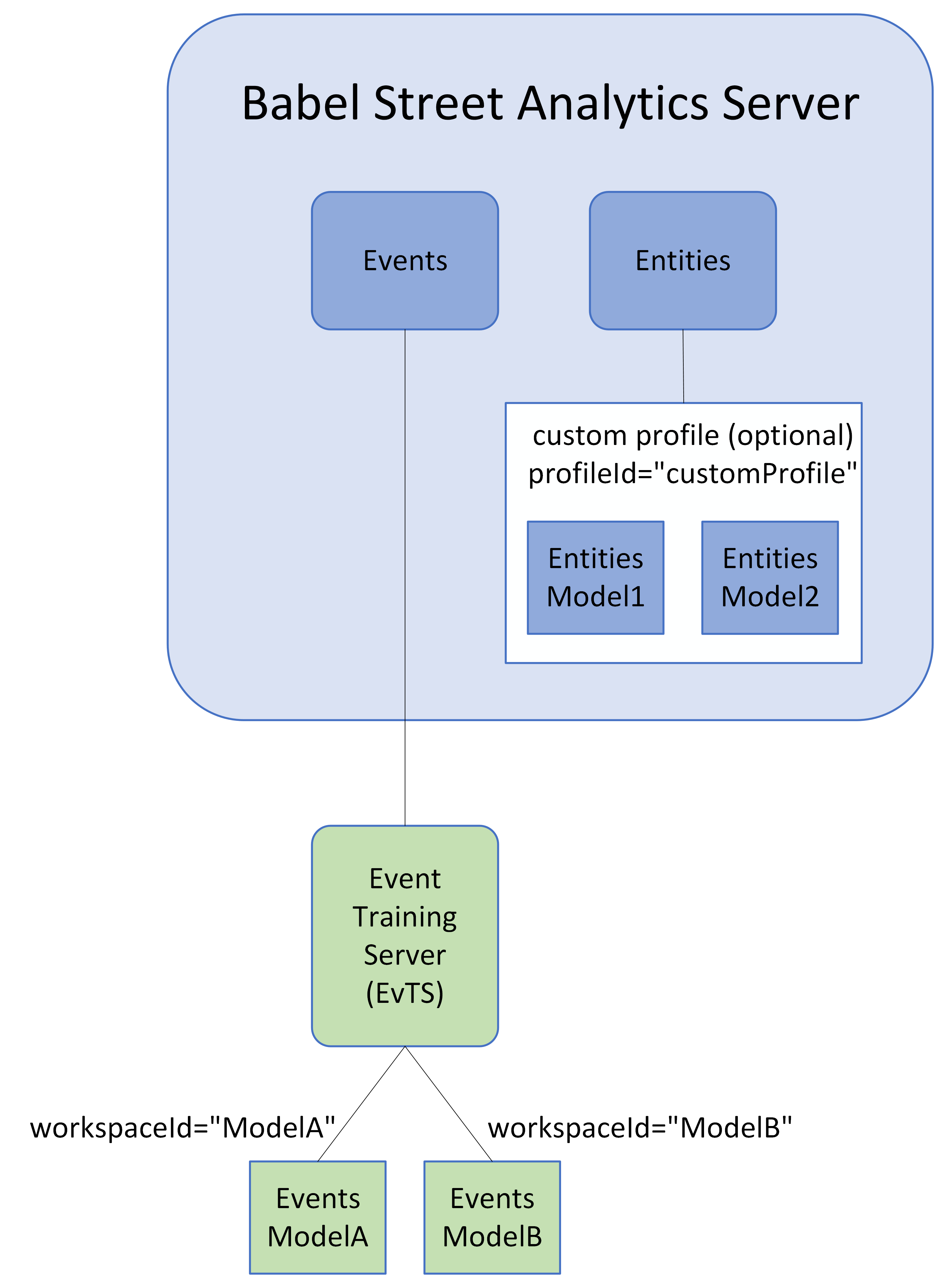

Both Entity Training Server (EnTS or RTS) and Event Training Server (EvTS or ETS) require specific Analytics Server configurations and custom profiles.

The headless and interactive installers can update Analytics Server to support Entity and Event Training Servers as required.

In the interactive installer, answer y to the following questions to update Analytics Server:

Should Analytics Server be configured with RTS support (y/n)?

Should Analytics Server be configured with ETS support (y/n)?

In the headless installer, modify the install-rs-headless.properties file to set up the proper support.

If you answer n or if you have an existing installation of Analytics Server, you can manually run the scripts to update Analytics Server after installation.

The following sections include instructions for installing stand-alone or as a docker container.

Analytics Server (RS) Headless Installer

The headless installer installs Analytics Server with Docker and without human interaction. Instead of user prompts, the installer parameters are taken from the properties file.

The installer prompts are defined in the file install-rs-headless.properties:

Start the installation:

./install-rs-headless.sh

The properties file is in the same directory as the script.

Use the --dry-run flag to validate the properties file, print the settings, and exit without changing anything.

Installing with Docker

Tip

Analytics Server can be configured and run with the roots hosted on an NFS server. An example Helm deployment can be found at https://github.com/rosette-api/helm.

Docker requirements

You must have a recent version of Docker Engine installed

Docker disk image size must be increased to 120GB (from the default of 60GB) to install the full Server package.

If installing on Windows, Docker for Windows must be installed (not Docker Toolbox or Docker Machine) with Hyper-V enabled.

Memory requirements

The Docker memory must be set to at least 16 GB if all endpoints are licensed and activated, and may require more depending on your application.

At a minimum, the Docker maximum memory should be the same or more than the Server JVM heap size. Otherwise, when running in a Docker container Analytics Server may get SIGKILL when the JVM asks for more memory the Docker allocates.

Update Analytics Server

Both the headless and interactive installers will update Analytics Server as required. If you have an existing installation of Analytics Server, or you didn't choose to update it on install, you can manually run the scripts to update Analytics Server.

If you are training named entity models, Update Analytics Server for Entity Training Server.

If you are training event models, Update Analytics Server for Event Training Server.

Update Analytics Server for Entity Training Server

If you are using a standalone (non-Docker) version of Analytics Server, copy the file

./scripts/update-rs-for-rts.shto the Analytics Server machine or directory.Run the script from the Analytics Server directory.

./basis/rts/update-rs-for-rts.sh

The script modifies the Analytics Server installation to install custom profiles and update environment variables.

Custom profiles are enabled if they are not already enabled. You will be prompted for where the custom profiles should be stored. The default location is

/basis/rosette/server/launcher/config/custom-profiles.If custom profiles are already enabled, the

ad-suggestionsandad-base-linguisticsprofiles are copied out to the custom profile subdirectory.If the

ad-suggestionsandad-base-linguisticsprofiles are already installed, they are overwritten.The

wrapper.conffile of Analytics Server is updated to include the following environment variables. If the file already has the variables defined, they are overwritten.set.RTS_URL=http://localhost:9080 set.RTSSOURCE=statistical

Each time the update script is run, a log file with a time stamp is created. Example:

update-rs-for-rts.sh.01-04-22_13-22.All modified files are backed up to the directory where they were changed, with a timestamp.

The script will prompt you for the following information:

Prompt | Purpose | Options | Notes |

|---|---|---|---|

Update Analytics Server for REX Training Server? | The Entity Training Server requires special configuration files. | Y to continue N to cancel | |

Fully qualified host name where REX Training Server is installed | The suggested value will be the host name of your current machine |

| |

Enter the port REX Training Server is listening on | Default: 9080 | ||

Enter the location of Analytics Server installation | Default:

| ||

Enter the directory to store custom profiles | Custom profiles can be in any directory | Default:

|

If the custom profiles are not installed correctly, you will receive a RosetteException from Adaptation Studio. Example:

ras_server_1 | raise RosetteException(code, message, url) ras_server_1 | rosette.api.RosetteException: unauthorizedProfile: Access to profile 'ad-suggestions' not granted: ras_server_1 | http://ras_proxy:42/rs/rest/v1/entities

Verify the updates

Once you have run the update script for Analytics Server, verify the install.

Start Analytics Server, if it's not already running.

Verify the custom profiles were deployed through the

custom-profilesendpoint:curl http://<analytics-host>:<port>/rest/v1/custom-profiles

or, in a browser open:

http://<analytics-host>:<port>/rest/v1/custom-profiles

At a minimum, the following two profiles should be returned by the endpoint:

[ad-base-linguistics,ad-suggestions]

If your installation has other custom profiles installed, they will also be returned.

Verify the Entity Training Server configuration.

Start Entity Training Server.

Call the

entitiesendpoint using the profileIdad-suggestionsand an existing Entity Training Server workspace.curl --location --request POST 'http://<analytics-host>:<port>/rest/v1/entities'/ --header 'Content-Type: application/json' --data-raw / '{ "content": "The Securities and Exchange Commission today announced the leadership of the / agency'\''s trial unit.",/ "profileId":"ad-suggestions", "options": {"rtsDecoder": "6224dd36897e684a81935558"}}'If the value for

rtsDecoderis a valid Entity Training Server workspace, a HTTP 200 should be returned.If this is a new install, and there are no Entity Training Server workspaces with the provided string, a HTTP 404 response should be returned. Any other value indicates a misconfiguration.

Update Analytics Server for Event Training Server

The update can be run interactively or with a headless installer.

Copy the file

/basis/ets/scripts/update-rs-for-ets.shto the Analytics Server machine or directory.Run the script from the Analytics Server directory.

./update-rs-for-ets.sh

Update for legacy schemas

The update script updates Analytics Server to support legacy events schemas that used the TIME and MONEY entity types, instead of the current entity types of TEMPORAL:TIME and IDENTIFIER:MONEY. To apply these updates, copy the file EntityTypePatcher.jar along with the update-rs-for-ets.sh script to the Analytics Server machine or directory.

Note

If the legacy schema patch is to be applied, the machine running the patch must have Java installed (minimum Java 8).

The update script will back up all changed files to the directory <current working directory>/regex-backup-<timestamp>. To roll back the changes, copy the files back to the Entity Extractor root directory.

The script will prompt you for the following information:

Prompt | Purpose | Options | Notes |

|---|---|---|---|

Should Analytics Server be updated to communicate with Events Training Server? | Configure Analytics Server with the events extraction configuration | N for the training server Y for the production server | |

Should the entity types be updated to support legacy schemata (create aliases for TIME and MONEY)? | Support legacy entity types | Y to update N to not update | |

Enter Location of Analytics Server configuration | This directory will be mounted as a volume. | Default:

| The configuration file to customize Analytics Server. |

Analytics Server memory management

There is not a single one size fits all number here. The best value for max heap size depends on a number of factors:

activated endpoints and features

usage pattern

data characteristics such as size (both character and token lengths), language, and genre

java garbage collector and its settings

Please note that it’s not recommended setting the max heap to the amount of physical RAM in the system. More heap doesn’t always translate to better performance, especially depending on your garbage collection settings.

Analytics Server’s data files are loaded into virtual memory. Some endpoints, such as /entities, involve a large amount of data. In order for Analytics Server to operate at its peak performance, we recommend that you reserve enough free memory to allow memory mapping of all our data files so that page misses are minimized at runtime.

To modify the JVM heap for standalone, edit the file server/conf/wrapper.conf and modify the value of wrapper.java.maxmemory.

# Maximum JVM heap in GB ROSETTE_JVM_MAX_HEAP=32 # Minimum JVM heap in GB ROSETTE_JVM_MIN_HEAP=32

We also recommend increasing the worker threads to 4, as described in Configuring worker threads for HTTP transport.

Install Entity Training Server (EnTS or RTS)

Note

The Entity Training Server used to be called REX Training Server. It is still referred to as RTS in many of the scripts in MTS.

You must have Docker, dockercompose, and unzip installed.

The product can be installed interactively or with a headless installer.

To install interactively:

Unzip the file

rts-installation-<version>.zip.From the directory

rts-installation-<version>, run the installation script:./install-rts.sh

To run the headless version of the script:

./install-rts-headless.sh

The properties file is in the same directory as the script.

Use the

--dry-runflag to validate the properties file, print the settings, and exit without changing anything.

The Entity Training Server installer will prompt you for the following information.

Whenever prompted for a directory, after you enter it, you will have to confirm either:

If the directory does not exist, you'll be prompted to create it.

If the directory exists, you'll be prompted whether it can be overwritten.

Prompt | Purpose | Options | Notes |

|---|---|---|---|

Enter installation directory for REX Training Server | Installation directory for Entity Training Server files | Default:

| This is now the |

Enter installation directory for REX Training Server docker files | Directory where Entity Training Server docker compose files will be installed. | Default:

| The disk requirements for the docker compose files are minimal (< 1 MB). However, other parts of the install require greater disk space |

Load REX Training Server docker image ? | Load the Docker images so they are available on the local machine | Otherwise, load them to a Docker registry shared by all machines. | We recommend |

Enter REX Training Server port to listen on | Default: 9080 You will be prompted to use that port. | This port and hostname will be required when installing the other servers. | |

Enter REX Training Server assets root directory | Directory to Install wordclasses and corpora to ${RTSASSETS} | Default: | This directory holds files needed for training including static wordclass files. The wordclass files can be manually installed later but must exist prior to starting RTS. |

Install worldclasses and corpora to ${RTSASSETS} | Y/N | ||

Enter REX Training Server REX root directory | This directory will be ${RTSROOT} | Default:

| |

Install REX flinx root to ${RTSROOT} | |||

Enter DAL configuration directory | The DAL connects to the mongo database on the Adaptation Studio component to access samples. If the port 27017 is NOT exposed on the RAS server then the | ||

Enter fully qualified host name where Adaptation Studio is installed | ${HOST} for single host install | The suggested value will be the host name of your current machine | Cannot be empty, |

Use ${ACTIVE_MQ_HOST} for ActiveMQ push notifications(y/n)?"; then "Enter fully qualified host name where ActiveMQ is installed (${HOST} for single host install)" "${HOST}") | |||

Use ${ACTIVE_MQ_HOST} (y/n)?"; then "Enter ActiveMQ port " 61616) | |||

Enter fully qualified host name where Adaptation Studio (RAS) is installed (${HOST} for single host install)" "${HOST}")" | |||

Enter location of REX Training Server Logs | Default: | ||

Enter REX Training Server workspaces root directory | This directory will be mounted as a volume. | Default: |

Entity Training Server memory management

The number of models that can be simultaneously trained depends on the size of the models and the memory available.

Once the model is written to disk, it consumes relatively little memory (~2 GB) for runtime requirements. The training and writing phases are much more memory intensive, each consuming approximately three times more memory. Typically, a model actively training will require approximately 10 GB of RAM.

Total memory consumption depends on the number of models being trained simultaneously, as well as the size of the models. The training server is a Java application and all operations use the JVM heap. To allow more simultaneous annotations on more projects, increase the RAM allocated to the JVM in Entity Training Server.

To modify the JVM heap:

Create a file

jvm.optionsin the/basis/rts/configdirectory. In this file, set the initial and maximum heap sizes. They should be set to the same value. The values must be less than the physical RAM installed on the machine.# Set the initial and minimum heap size to 16GB -Xms16G # Set the maximum heap size to 16GB -Xmx16G

Edit the file

/basis/rts/rts-docker/docker-compose.ymland uncomment the line${JVM_OPTIONS}:/config/jvm.options.# Optionally override JVM settings here, default -Xms8G -Xmx16G - ${JVM_OPTIONS}:/config/jvm.optionsEdit the file

/basis/rts/rts-docker/.envand setJVM_OPTIONSto point to thejvm.optionsfile.JVM_OPTIONS=/basis/rts/config/jvm.options

Install Event Training Server (EvTS or ETS)

The Event Training Server must be installed on both the training and the Analytics Server production instance (extraction). The same Event Training Server file is installed, either in training or extraction mode.

You must have Docker, dockercompose, and unzip installed.

The product can be installed interactively or with a headless installer.

To install interactively:

Unzip the file

ets-installation-<version>.zip.Start the installation:

./install-ets.sh

To run the headless install, use the

--headlessflag. The.propertiesfile is in the same directories as the installation script.Use the

--dry-runflag to validate the properties file, print the settings, and exit without changing anything.

The Event Training Server installer will prompt you for the following information:

Prompt | Purpose | Options | Notes |

|---|---|---|---|

ETS mode | Determine if installation is for training or extraction (production) mode | 1) Training 2) Extraction 3) Exit Installer | Sets the mode. Training mode prompts for location of Analytics Server; extraction mode does not. |

Installation directory | Installation directory for Event Training Server files | Default: If the directory does not exist, you'll be prompted to create it. If the directory exists, you'll be prompted whether it can be overwritten. | This is now the |

Port Event Training Server should listen on | Default: 9999 You will then have to confirm to use that port. | This port and hostname will be required when installing the other servers. | |

Directory for ETS workspaces | This directory will be mounted as a volume. | Default: If the directory does not exist, you'll be prompted to create it. If the directory exists, you'll be prompted whether it can be overwritten. | This directory holds the events models. |

Fully qualified host name where Analytics Server is installed | Not asked when installing in extraction mode (production server) | The suggested value will be the host name of your current machine. | Cannot be empty, |

Port Analytics Server is listening on | Not asked when installing in extraction mode (production server) | Default: 8181 | |

Full qualified name where ActiveMQ is installed | Active_MQ_Host | ||

Active MQ port | Default: 61616 |

Event Training Server configuration

Parameter | Note | Default | |||||||||||||||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Only needed in training mode. Users are prompted during install for the value if performing a Training mode installation. | None | |||||||||||||||||||||||||||||||||||||||||||||||

| Event Training Server is either in training or extraction mode | Training | |||||||||||||||||||||||||||||||||||||||||||||||

| The port Event Training Server will listen on. Users are prompted during install for the value | 9999 | |||||||||||||||||||||||||||||||||||||||||||||||

| The container image of the Event Training Server front end. | ||||||||||||||||||||||||||||||||||||||||||||||||

| The location of the |

| |||||||||||||||||||||||||||||||||||||||||||||||

| true if Event Training Server should use SSL when connecting to Analytics Server (and P-ETS workers if they are on remote hosts). False otherwise. Note, | false | |||||||||||||||||||||||||||||||||||||||||||||||

| The password of the JKS keystore file. | None | |||||||||||||||||||||||||||||||||||||||||||||||

| The location of the JKS keystore. | None | |||||||||||||||||||||||||||||||||||||||||||||||

| The password of the JKS truststore file. | None | |||||||||||||||||||||||||||||||||||||||||||||||

| The location of the JKS truststore. | None | |||||||||||||||||||||||||||||||||||||||||||||||

| Controls the granularity (verbosity) of the logging. Options include, ERROR, WARN, INFO, DEBUG, or TRACE. | INFO | |||||||||||||||||||||||||||||||||||||||||||||||

| The container image of the P-ETS worker | Release dependent | |||||||||||||||||||||||||||||||||||||||||||||||

| The location to store the Event Training Server models. |

| |||||||||||||||||||||||||||||||||||||||||||||||

| The container image of the nginx proxy in use. |

| |||||||||||||||||||||||||||||||||||||||||||||||

| The host certificate in PEM file format. Used to enable incoming SSL connections. | None | |||||||||||||||||||||||||||||||||||||||||||||||

| The host key in PEM file format. Used to enable incoming SSL connections. | None | |||||||||||||||||||||||||||||||||||||||||||||||

| The CA certificate in PEM file format. Used to enable incoming SSL connections. | None | |||||||||||||||||||||||||||||||||||||||||||||||

| The location of the nginx configuration file. Either |

| |||||||||||||||||||||||||||||||||||||||||||||||

| Configuration file for nginx when operating with SSL enabled. | ||||||||||||||||||||||||||||||||||||||||||||||||

| Configuration file for nginx when operating with SSL not enabled. | ||||||||||||||||||||||||||||||||||||||||||||||||

[a] Parameter updated by the | |||||||||||||||||||||||||||||||||||||||||||||||||

Event Training Server application.yml configuration file

The application.yml file controls the configuration of the Event Training Server application. The values in this file rarely change and are relative to the container, meaning the values are only used within the container and have no relevance to the machine running the container.

Server

This section contains the basic server setup. context-path is the part of the URL prepended to all Event Training Server URLs for example /ets/info. In the container, Event Training Server is listening on port 9999.

server:

servlet:

context-path: /ets

port: 9999Logging

This section contains the log setup. The default log level is info and can be changed by setting the ETS_LOGGING_LEVEL value in the .env file. The com.netflix.eureka.cluster is set to ERROR because by default it fills the log with unneeded log messages. The same is true for com.netflix.eureka.registry. If you would like to log everything, the two lines referencing com.netflix.* can be commented out with a #.

logging:

level:

ROOT: ${ETS_LOGGING_LEVEL:info}

com.netflix.eureka.cluster: ERROR

com.netflix.eureka.registry: WARNManagement

This section controls the management services, including health and metrics. This service can be run on a different port so the management services are not on the same interface as the Event Training Server API. Note: enabling this management port will require changes to the docker-compose.yml file to expose the port.

The health endpoint was customized to disable the display of disk space reporting, ping reporting and refresh information as it cluttered the response. In addition, the health endpoint is configured to always show details of the dependent services (P-ETS and in training mode, Analytics Server). To change the behavior and get a simple UP/DOWN response set show-details to never.

The following management endpoints are enabled: info, health, metrics, and prometheus. There are approximately 20 additional management endpoints that can be enabled.

Metrics is enabled to expose runtime metric information about the Event Training Server process, memory consumption, threads and CPU usage.

Prometheus is enabled so that Event Training Server can be used as a data source for monitoring applications such as Graphana.

Endpoint timing information is enabled and available using the /ets/prometheus endpoint

management:

# Management can be on a separate port

# server:

# port: 9888

health:

diskspace:

enabled: false

ping:

enabled: false

refresh:

enabled: false

endpoint:

health:

show-details: always

endpoints:

web:

base-path: /

exposure:

include: "prometheus, metrics, health, info"

metrics:

web:

server:

auto-time-requests: trueEureka

ETS_HOST is only used when Event Training Server is running remotely to PETS

eureka:

dashboard:

path: /eurekadashboard

enabled: false

instance:

appname: JETS

hostname: ${ETS_HOST:ets-server}

leaseRenewalIntervalInSeconds: 30

leaseExpirationDurationInSeconds: 120

status-page-url: /ets/info

health-check-url: /ets/health

server:

enableSelfPreservation: false

client:

healthcheck:

enabled: false

# As the server we don't want to register with ourselves

registerWithEureka: false

fetchRegistry: false

serviceUrl:

defaultZone:

http://${eureka.instance.hostname}:${server.port}/ets/eureka/Info

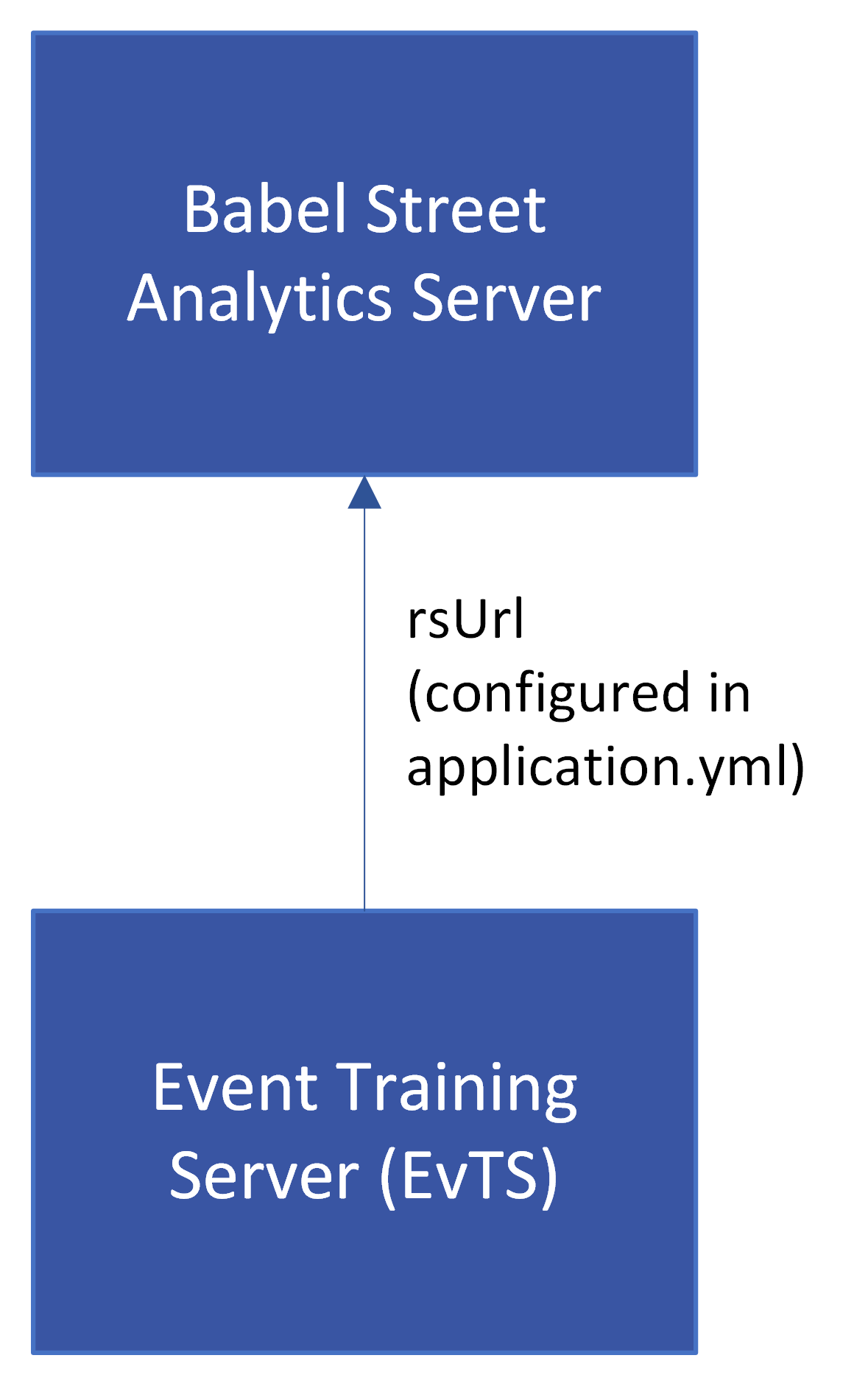

This section determines the Event Training Server operating mode (training or extraction). The ETS_TRUSTSTORE_FILENAME and ETS_KEYSTORE_FILENAME are only defined when running outside a container.

info:

app:

name: "events-training-server"

description: "Rosette Event Extraction and Training Server"

ets:

operating-mode: "${ETS_MODE:training}"

rs:

# rsUrl is only required in training configuration

rsUrl: ${RS_URL:}

rsConnectTimeoutMS:30000

ssl:

enable-outgoing-ssl: ${ENABLE_OUTGOING_SSL:false}

key-store: ${ETS_KEYSTORE_FILENAME:/certs/keystore.jks}

key-store-password: ${ETS_KEYSTORE_PW:}

trust-store: ${ETS_TRUSTSTORE_FILENAME:/certs/truststore.jks}

trust-store-password: ${ETS_TRUSTSTORE_PW:}

pets:

minimumVersion: v1.0.0

connectTimeoutMS: 60000

readTimeoutMS: 60000

writeBufferSizeKB: 1000Springdoc

springdoc:

show-actuator: true

# Enable/disable swagger documentation

api-docs:

enabled: true

spring:

banner:

location: classpath:ets-banner.txt

resources:

add-mappings: false

cloud:

discovery:

client:

composite-indicator:

enabled: false

health-indicator:

enabled: falseEnabling Event Training Server log files

This process describes how to capture the logs for the Event Training Server frontend process, (the J-ETS server). The backend worker (P-ETS) processes logs through the docker subsystem.

Configuring the Log Files

On the host machine, create a directory for the logs and set the permissions.

mkdir /basis/ets/logs chmod 777 /basis/ets/logs

The container must have sufficient permissions to write to the directory (uid = 1000, user = ets, group = ets).

Edit the file

/basis/ets/ets-docker/.env, adding a variable to set the logs directory.Add:

ETS_LOG_DIR=/basis/ets/logs

Edit the file

/basis/ets/ets-docker/docker-compose.ymlto mount the logs directory.In the

ets-server:section, add a new volume definition, using the new logs directory. The last line in the sample below is the added line.volumes: - ${ETS_CONFIG_FILE}:/application.yml - ${ETS_KEYSTORE_FILE}:/certs/keystore.jks - ${ETS_TRUSTSTORE_FILE}:/certs/truststore.jks - ${ETS_LOG_DIR}:/logsThis will create the

/logsdirectory in the container.Tell Event Training Server to use the

ETS_LOG_DIRby editing the file/basis/ets/config/application.ymland adding thefile: path: /logs statements.file: path: /logs level: ROOT: ${ETS_LOGGING_LEVEL:info} com.netflix.eureka.cluster: ERROR com.netflix.eureka.registry: WARNNote that the values in the

application.ymlfile refer to values in the container, not the host. The path specified inlogging.file.pathshould be/logsor whatever the volume was set to in thedocker-compose.ymlfile.

Log File Naming

The default log file name is spring.log. If you prefer a different name, you can change the log file name.

Edit the file

/basis/ets/config/application.ymland set the log file name by adding the name parameter and removing the path parameter from the logging section. If path and name are both present, path takes precedence and the default log file name will be used.logging: file: name: /logs/ets-server.log level: ROOT: ${ETS_LOGGING_LEVEL:info} com.netflix.eureka.cluster: ERROR com.netflix.eureka.registry: WARN

Log Rotation

By default, once logs reach 10 MB they are archived. That is, they are compressed with a date stamp and sequence number such as ets-server.log.2022-03-04.0.gz.The file size at which this occurs can be changed by setting the max-size in the file /basis/ets/config/application.yml.

logging:

file:

name: /logs/ets-server.log

max-size: 20 MBSupported values for the max-size include MB and KB.

Install indoc coref server

Within a document, there may be multiple references to a single entity. In-document coreference (indoc coref) chains together all mentions to an entity.

The indoc coref server is an additional server which may be installed alongside Analytics Server.

The response time will be slower when the request includes options such as

{"useIndocserver":true}. For this reason, we recommend using a GPU with indoc coref enabled.Indoc coref is supported for English only.

You must have Docker, dockercompose, and unzip installed.

The product can be installed interactively or with a headless installer.

To install interactively:

Unzip the file

coref-installation-<version>.zip.From the directory

coref-installation-<version>, run the installation script:./install-coref.sh

To run the headless version of the script:

./install-coref-headless.sh

The properties file is in the same directory as the script.

Use the

--dry-runflag to validate the properties file, print the settings, and exit without changing anything.

The Indoc Coref Server installer will prompt you for the following information.

Prompt | Purpose |

|---|---|

Install Coreference Server | Choose whether to install the indoc coref server. |

${Install Dir} does not exist, create (y/n)? | Create a new directory to install |

${Install Dir} exists, use anyway (files will be overwritten) (y/n)? | Overwrite the existing version. |

Load Coreference Server docker image (recommend Y) (y/n)? | |

Enter port Coreference Server should listen on (default 5000) | Default: 5000 |

Indoc Coref headless installer

The headless installer installs the indoc coref server without human interaction. Instead of user prompts, the installer parameters are taken from the properties file.

The installer prompts are defined in the file install-coref-headless.properties:

# The directory the Coreference server should be installed in # Default is /basis/coref install_dir=/basis/coref # Load the Coreference server docker image into the docker cache # Default is true load_image=true # The port the Coreference server should listen on # Default is 5000 coref_port=5000

Start the installation:

./install-coref.sh --headless

Install Adaptation Studio (RAS)

You must have Docker, dockercompose, and unzip installed.

Unzip the file

rosette-adaptation-studio-<version>.zip.From the directory

rosette-installation-<version>, run the installation script:Start the installation:

./install-ras.sh

To run the headless version of the script:

./install-ras-headless.sh

The properties file is in the same directory as the script.

Use the

--dry-runflag to validate the properties file, print the settings, and exit without changing anything.

Note

SSL for the front end browser, the connection from the web client to the Adaptation Studio server, can be enabled when RAS is installed. After installation of all three servers is complete, you can enable SSL between the servers.

To enable SSL for the front end browser, answer Yes to the question "Enable SSL for NGINX?". The certificate should already be on the server before beginning the installation.

Enabling front end SSL support is independent of enabling SSL between the servers.

The Adaptation Studio installer will prompt you for the following information:

Prompt | Purpose | Options | Notes |

|---|---|---|---|

Installation directory | Directory for docker compose files and helper scripts. | Default:

| The disk requirements for the docker compose files are minimal (< 1 MB). However, other parts of the install require greater disk space |

Enter location of Adaptation Studio logs | Directory for log files | Default: | |

Load the Adaptation Studio Docker image? | Load the Docker images so they are available on the local machine | Otherwise, load them to a Docker registry shared by all machines. | |

Enable SSL for NGINX? | To enable SSL for the connection from the web client to the RAS server | In a closed network this may not be required however, passwords from the client to server are initially sent using clear-text so it is recommended to enable SSL. | |

Target directory for SSL certificate files | Directory that will contain the SSL certificate files | Default: | For information on SSL certificate files:http://nginx.org/en/docs/http/ngx_http_ssl_module.html#ssl_certificate |

Location of the certificate key file | Where to find the certificate key file | The certificate must be in PEM format | |

Location of the certificate file | Where to find the certificate file | The certificate must be in PEM format | |

HTTPS Port to expose | Required if enabling SSL | Default: 443 | |

HTTP port to expose | Required if not enabling SSL | Default: 80 | |

Fully qualified host name where REX Training Server is installed | Used by Adaptation Studio to perform training for entity extraction | The Entity Training Server does not need to be installed before Adaptation Studio, but you must know where it will be installed. Cannot be empty, | |

Port REX Training Server is listening on |

| Default: 9080 |

|

Fully qualified host name where Events Training Server is installed | Used by Adaptation Studio to perform training for events extraction | The Event Server does not need to be installed before Adaptation Studio, but you must know where it will be installed. Cannot be empty, | |

Port Event Training Server is listed on | Default: 9999 | ||

Fully qualified host name where Analytics Server is installed | Used internally by Adaptation Studio |

| Analytics Server does not need to be installed before Adaptation Studio but Server should be installed and started before starting the studio. Liveliness checks will be performed on startup. Cannot be empty, |

Port Analytics Server is listening on | Default: 8181 | ||

Data directory for Adaptation Studio database | Directory where the Adaptation Studio data will be stored. | Default: | This can be an NFS mount. |

Directory for database backups | Directory where data should be stored when backed up from the RAS client. | Default: | This can be an NFS mount. |

Port to expose for the database | This port will be used by the Entity Training Server to connect to the Adaptation Studio database instance to retrieve samples. | Default: 27017 |

Verify system status

Model Training Suite has scripts on each server to monitor the health and status of the system. Run them at startup to verify the system is ready to go.

The scripts are:

/basis/rs/scripts/rs-healthcheck.sh/basis/rts/scripts/rts-healthcheck.sh/basis/ras/scripts/ras-healthcheck.sh

where /basis is the default installation directory. If you've installed in a different directory, replace /basis with your install directory.

Each script verifies that the Docker containers have loaded and all components are running.

To check the status of Event Training Server, open a browser and proceed to http:/{host}:{port}/ets/health. The default port is 9999.

The workers indicate that Event Training Server is available and Analytics Server can communicate with it:

{"status": "UP",

"components": {

"PETS-Workers": {

"status": "UP",

"details": {

"P-ETS Workers": "1 Worker(s) Available"

}

},

"RosetteServer": {

"status": "UP",

"details": {

"Analytics Server": "Available at http://memento:8181/rest/v1"

}

}

}

}Use the following links to verify the status of each of the servers:

Event Training Server health check: http://localhost:9999/ets/health

Entity Training Server Training Server endpoints health check: http://localhost:9080/health

Analytics Server: http://localhost:8181/rest/v1/info

Adaptation Studio: http://localhost/

Model Training Suite is shipped with a sample events model that can be used to verify the installation for events. Use the import_project.sh script to import the project.

Manage the training servers

Start the servers

Once installed, start the servers in the following order:

Run the Event Training Server (EvTS)

Navigate to the installation directory containing the Docker compose files. The default is:

/basis/ets/ets-docker

Run the service in detached mode.

docker compose up -d

To check that the service has started, check the logs.

docker compose logs -f

To check the status of Event Training Server, open a browser and proceed to

http:/{host}:{port}/ets/health. The default port is 9999.The workers indicate that Event Training Server is available and Analytics Server can communicate with it:

{"status": "UP", "components": { "PETS-Workers": { "status": "UP", "details": { "P-ETS Workers": "1 Worker(s) Available" } }, "RosetteServer": { "status": "UP", "details": { "Rosette Server": "Available at http://memento:8181/rest/v1" } } } }

Run the Entity Training Server (EnTS or RTS)

Navigate to the installation directory containing the Docker compose files. The default is:

/basis/rts/rts-docker

Run the service in detached mode.

docker compose up -d

To check that the service has started, check the logs. This will display the last few lines of the logs from the service.

tail /basis/rts/logs/messages.log

The service can be tested by using a browser and navigating to

http://<host>:<port>/health. The default port is 9080.Example:

http://localhost:9080/health

Run Analytics Server (RS)

To run on the console:

cd /basis/rosette/server/bin ./launch.sh console

To stop in the console:

CTRL-C

To run as a daemon:

./launch.sh start

To stop the daemon:

./launch.sh stop

To run the dockerized version of Analytics Server:

cd /basis/rs/rs-docker

If running on the console:

CTRL-CIf running as a daemon:

./launch.sh stop

To stop the dockerized version:

docker compose down

Note

Check the logs in /basis/rosette/server/logs/ to see any errors from startup or during the server run.

Note

If your installation includes the entity extraction component (rex-root), you may see failed to open ... warning messages for data files in languages not installed in your system. These can safely be ignored.

Tip

Now you can Try it out.

Run Adaptation Studio

Analytics Server and Entity Training Server must be running.

Navigate to the installation directory (default

/basis/ras).Execute the script

./scripts/start-standalone.sh. This script starts the services in detached mode and prints the logs. The message "Rosette Active Learning Studio Server <version> now up" will be displayed when the server is ready. You can then press CTRL-C to disconnect from the logs.Using a browser, navigate to

http(s)://<host>:<port>/to display the Adaptation Studio landing page.http://localhost:80

Verify system status

Model Training Suite has scripts on each server to monitor the health and status of the system. Run them at startup to verify the system is ready to go.

The scripts are:

/basis/rs/scripts/rs-healthcheck.sh/basis/rts/scripts/rts-healthcheck.sh/basis/ras/scripts/ras-healthcheck.sh

where /basis is the default installation directory. If you've installed in a different directory, replace /basis with your install directory.

Each script verifies that the Docker containers have loaded and all components are running.

To check the status of Event Training Server, open a browser and proceed to http:/{host}:{port}/ets/health. The default port is 9999.

The workers indicate that Event Training Server is available and Analytics Server can communicate with it:

{"status": "UP",

"components": {

"PETS-Workers": {

"status": "UP",

"details": {

"P-ETS Workers": "1 Worker(s) Available"

}

},

"RosetteServer": {

"status": "UP",

"details": {

"Analytics Server": "Available at http://memento:8181/rest/v1"

}

}

}

}Use the following links to verify the status of each of the servers:

Event Training Server health check: http://localhost:9999/ets/health

Entity Training Server Training Server endpoints health check: http://localhost:9080/health

Analytics Server: http://localhost:8181/rest/v1/info

Adaptation Studio: http://localhost/

Model Training Suite is shipped with a sample events model that can be used to verify the installation for events. Use the import_project.sh script to import the project.

Superuser password

Important

The initial superuser password for Adaptation Studio is set on install and must be changed on first login.

The superuser can create users and perform all tasks. At install, the superuser is set to admin.

The first time you log in as admin, you will be forced to change the password. The initial login information is:

Name: admin

Password: admin

The superuser password can be reset by running the reset_admin.sh in /basis/ras/scripts. After reset, you will be prompted to change the password when you log in the first time.

Note

If the superuser password contains the special characters $ or \, the password must be in quotes when sent to the reset_admin.sh script. Example:

./reset_admin.sh '2^$4bu\Qm$4C'

Headless installer

When using the headless installer, you can set the initial superuser password in the properties file.

To override the admin password, set the admin_password property to the plain text password to use in the install-ras-headless.properties file. On install, this password will be hashed and inserted into the authentication database of Adaptation Studio. Once installed the value can be deleted from the properties file.

You will not be prompted to change the password on the first login.

Shut down the servers

Once you've entered annotations and selected annotate, the data is saved in the system. If you bring down the servers while models are being trained, the models will be recreated and retrained upon restart.

Shut down the servers in the following order:

Adaptation Studio

Navigate to the scripts directory (default

/basis/ras/scripts/)stop-standalone.sh

Analytics Server

If running on the console:

CTRL-CIf running as a daemon:

./launch.sh stop

Entity Training Server

Navigate to the docker directory (default

/basis/rts/rts-docker/)docker compose down

Event Training Server

Navigate to the docker directory (default

/basis/ets/ets-docker/)docker compose down

Troubleshooting

These commands are executed in the directory with the docker compose files, e.g. /basis/rts/rts-docker.

Command | Purpose |

|---|---|

| Applies the variables from the |

| If you omit the |

| The |

| Attaches to the logs |

| Allows you to see the ENV variables the container is using. |

docker compose config

services:

rex-training-server:

environment:

AS_MONGO_DAL_CONNECTOR_CONFIG_DIR: /basis/rts/config

image: rex-training-server-tom-0.4.1:latest

ports:

- 9080:9080/tcp

volumes:

- /basis/0.8.final/rts/workspaces:/basis/rts/workspaces:rw

- /basis/0.8.final/rts/assets:/basis/rts:rw

- /basis/0.8.final/rts/config:/basis/rts/config:rw

- /basis/0.8.final/rts/logs:/logs:rw

- /basis/0.8.final/rts/rts-docker/jvm.options:/config/jvm.options:rw

version: '3.0'System log files

Each component generates logs which may be useful if a problem occurs.

The location of the logs is determined during installation. The files in the default locations are:

/basis/rs/logs/wrapper.logs/basis/rts/logs/messages.logs/basis/ras/logs/error.log(Nginx error log)/basis/ras/logs/access.log(Nginx access log)/basis/ras/logs/server.log

The Event Training Server (EvTS) leverages Docker log files, allowing you to customize log file location, rotation, log format (e.g. JSON), and if the logs are stored off the device. To display log information, from the Event Training Server install directory:

docker compose logs

or to follow the logs:

docker compose logs -f

See the Docker documentation for configuration and usage information.

Log files are also created during installation. Refer to these files for details on how each component was installed, as well as SSL configuration.

Analytics Server

/rest/metrics

The metrics endpoint provides info about the JVM. These values can be used with tools like ELK to graph the overall health of the container. For example, you can graph the percentage of memory used over time to see if you are running out of memory.

curl localhost:8181/rest/metrics

# HELP jvm_buffer_pool_used_bytes Used bytes of a given JVM buffer pool.

TYPE jvm_buffer_pool_used_bytes gauge

jvm_buffer_pool_used_bytes{pool="mapped",} 1.9689509698E10

jvm_buffer_pool_used_bytes{pool="direct",} 219885.0

jvm_buffer_pool_used_bytes{pool="mapped - 'non-volatile memory'",} 0.0

# HELP jvm_buffer_pool_capacity_bytes Bytes capacity of a given JVM buffer pool.

# TYPE jvm_buffer_pool_capacity_bytes gauge

jvm_buffer_pool_capacity_bytes{pool="mapped",} 1.968950405E10

jvm_buffer_pool_capacity_bytes{pool="direct",} 219885.0

jvm_buffer_pool_capacity_bytes{pool="mapped - 'non-volatile memory'",} 0.0

# HELP jvm_buffer_pool_used_buffers Used buffers of a given JVM buffer pool.

# TYPE jvm_buffer_pool_used_buffers gauge

jvm_buffer_pool_used_buffers{pool="mapped",} 58.0

jvm_buffer_pool_used_buffers{pool="direct",} 17.0```

Ping

Ping the server to test that Server is running and you can connect to it.

bash:

curl http://localhost:8181/rest/v1/ping

Windows Powershell:

Invoke-WebRequest -Uri http://localhost:8181/rest/v1/ping

Windows Command Prompt:

start "" http://localhost:8181/rest/v1/ping

This should return:

{"message":"Rosette at your service","time":1467912784915}

Query the version

bash:

curl http://localhost:8181/rest/v1/info

Windows Powershell:

Invoke-WebRequest -Uri http://localhost:8181/rest/v1/info

This should return:

{

"name": "Rosette",

"version": "1.28.0",

"buildNumber": "a8ea5010",

"buildTime": "20231218215507",

"licenseExpiration": "Perpetual"

}Entity Training Server → Adaptation Studio troubleshooting/monitoring

The /rts/info/server endpoint

$ curl "http://localhost:9080/rts/info/server"

returns the configuration properties along with the version:

{"assetRoot": "/basis/rts",

"concurrentSerializationThreads": 1,

"concurrentTrainThreads": 2,

"concurrentWordclassTrainingThreads": 2,

"memoryCacheTimeout": 180,

"serializationDelay": 300,

"version": "1.0.1",

"workspaceRoot": "/basis/rts/workspaces"}DAL Connection Test

The DAL connection test verifies the connection between Entity Training Server and the mongo instance on Adaptation Studio by connecting to EnTS directly. If Adaptation Studio is not reachable, this test could hang for 1-2 minutes waiting for the connection.

curl -v -X POST "http://localhost:9080/rts/rex/test-dal-connector" --header 'Content-Type: application/json' -d '{

"connectorId": "AS_MONGO",

"corpusType": "ANNOTATED_TRAIN_CORPUS",

"healthCheck" : "true",

"config": {

"projectId": "5f1470b6412ff29b8e4982f3",

"sampleIds": "5f158b0e412ff29b8e4983b8,5f158b0e412ff29b8e4983b5,5f158b0e412ff29b8e4983b7"

}

}'If it is working, it will return:

{"tests":[{"connectorId":"AS_MONGO","message":"Health check passed","success":true}]}This message indicates that the port is open and mongo is responding to requests.

The DAL connector is configured using the file /basis/rts/config/mongodal_config.yaml.

connectionString: 192.168.0.145:27017 maxSamples: 100000 useSsl: false user: root password: admin authenticationDB: admin

The connectionString points to the mongo instance in Adaptation Studio which is needed by the DAL connector in Entity Training Server. If the test is not successful, use cURL or a mongo client to test the connection to the mongo server without going through Entity Training Server.

curl 192.168.0.145:27017

When using cURL, a successful response will be a message from mongo similar to:

"It looks like you are trying to access MongoDB over HTTP on the native driver port."

This indicates the port is open and mongo is responding to requests.

Analytics Server → Entity Training Server troubleshooting/monitoring

Ping the server to test that the Entity Training Server is running and confirm that you can connect to it:

curl localhost:9080/rts/info/server

Verify the settings in the .env file in the rs-docker directory

$ cat .env

returns:

# Connector information RTS_URL=http://192.168.1.234:9080 RTS_SOURCE_STRING=statistical

Verify that the hostname/port are correct and reachable from the host. In the Analytics Server container, the following command should return 200.

curl $RTS_URL

Analytics Server → Event Training Server troubleshooting/monitoring

Ping the server to test that Event Training Server is running and confirm that you can connect to it:

curl -v http://<host>:<port>/rest/v1/events/info

If Analytics Server can't connect to Event Training Server, check the event-extractor-factory-config.yaml file in the rs/config/rosapi directory. Verify that the value of eventTrainingServerUrl is correct and not commented out.

Adaptation Studio

Model Training Suite uses mongo as the backend database to store projects. The mongo client is required to perform a health check of the backend.

For performance, db.enable.FreeMonitoring() can be enabled and will provide an external API with statistics. This is not recommended for use in production.

The Manage Project page in the Studio will display the status of Analytics Server and Entity Training Server.

SSL support

Note

SSL for the front end browser, the connection from the web client to the Adaptation Studio server, can be enabled when Adaptation Studio is installed. After installation of all servers is complete, you can enable SSL between the servers.

This section describes how to enable and disable SSL support between the servers.

Enabling front end SSL support is independent of enabling SSL between the servers.

SSL prerequisites

To run the provided scripts you need a certificate and a key generated for each host that is running a component. Each of the certificates must be signed by the root certificate authority (CA) that will be shared by all hosts running components. All certificates, keys, and root certificate authority files must be stored in PEM format.

Note

If all components are running on a single host, the same certificate and key PEM files can be shared by Adaptation Studio, Event Training Server, Entity Training Server, and Analytics Server.

Event Training Server, Entity Training Server, and Analytics Server are Java processes, so they require a keystore for the host and truststore for the root CA in a password protected Java Key Store (JKS) format in addition to PEM-format files. There is a script provided, generate-keystores.sh, in the Entity Training Server docker directory that will convert the certificate and key into a password protected JKS file. The JKS file must have a .jks file extension. Additionally, this script will generate a password protected truststore JKS file. For Event Training Server, the generate-keystores.sh script is located in the scripts directory.

As long as the root CA is included, any truststore can be used. The truststore created by the provided script does not have to be used.

Enable and disable SSL support

Note

These instructions assume you have certificates for each of the servers. You will need the location and passwords for the keystore and the truststore for each server.

Adaptation Studio includes scripts to enable and disable SSL support between the servers. The scripts are found in the following directories:

/basis/rs/rs-docker/basis/rts/rts-docker/basis/ras/scripts/basis/ets/ets-docker/basis/coref/scripts

Enable SSL

Install and test the complete Adaptation Studio installation before enabling SSL. This will verify that everything is properly installed.

For each component:

Warning

You must shut down all the services before enabling SSL between them.

If you receive an error when restarting the services: "Cannot start service servicename: error while creating mount source path", the services were not shut down before enabling SSL.

To continue, restart Docker:

sudo systemctl restart docker

then restart the services.

Once the installation has been verified, shut down the services.

Execute the script from the directory containing scripts. For each product, you will be prompted for the location and passwords for the keystore and truststore.

To run the headless version of the scripts, use the

--headlessflag. The.propertiesfiles are in the same directories as the scripts.Use the

--dry-runflag to validate the properties file, print the settings, and exit without changing anything./basis/rs/scripts/enable-rs-ssl.sh/basis/rts/rts-docker/enable-rts-ssl.sh/basis/ras/scripts/enable-ras-ssl.sh/basis/ets/ets-docker/enable-ets-ssl.sh/basis/coref/scripts/enable-coref-ssl.sh

Start the services.

Disable SSL

Shut down the services.

Execute the script from the directory containing the docker-compose files.

To run the headless version of the scripts, use the

--headlessflag. The.propertiesfiles are in the same directories as the scripts.Use the

--dry-runflag to validate the properties file, print the settings, and exit without changing anything./basis/rs/rs-docker/disable-rs-ssl.sh/basis/rts/rts-docker/disable-rts-ssl.sh/basis/ras/scripts/disable-ras-ssl.sh/basis/ets/ets-docker/disable-ets-ssl.sh/basis/coref/scripts/disable-coref-ssl.sh

Start the services.

Analytics Server SSL scripts

Analytics Server is based on the Java-based OSGI with Jetty web server. To enable incoming SSL connections the server configuration must be updated to include the path to the truststore, keystore, and their respective passwords. Additionally, to enable outgoing SSL connections, e.g. Entity Training Server Decoder, custom code etc., the Java virtual machine settings must be updated to include the path to the truststore, keystore, and their respective passwords as well.

The enable-rs-ssl.sh script performs the following actions:

Prompts for the location and passwords of the truststore and keystore files.

Copies the truststore and keystore to the configuration directory so that the container has access to them.

Note

if you are re-enabling SSL and using truststores and keystores already present in the configuration directory you will see a warning message about the files being identical and not being copied. This message can safely be ignored.

Copies

ssl-conf-template.confto the configuration directory and renames it tossl-conf.conf.ssl-conf.confwill contain parameters to the JVM settings for Analytics Server and will hold the names and passwords of the truststore and keystore.On startup, if the Analytics Server container detects the presence of the

ssl-conf.conffile in the config directory and the environment variable ENABLE_SSL is set to 1 (set in the .envfile), then the configuration file will be included in thewrapper.conffile for RS. Thewrapper.conffile is responsible for setting up the runtime environment for Analytics Server.Sets ENABLE_SSL to 1 in

.env.Adds/uncomments the keystore and truststore file names and passwords in the file

org.apache.cxf.http.jetty-main.cfgin the config directory.Sets the URL scheme to https in the file

com.basistech.ws.cxf.cfgin the config directory.Sets the scheme of the RTS_URL to https in the

.envfile.

The disable-rs-ssl.sh script reverses the actions above:

Note

This script does not delete the truststore or keystore from the configuration directory.

Sets ENABLE_SSL to 0 in

.env.Comments out the keystore and trustore file names and passwords in the file

org.apache.cxf.http.jetty-main.cfgin the config directory.Sets the URL scheme to http in the file

com.basistech.ws.cxf.cfgin the config directory.Sets the scheme of the RTS_URL to http in the

.envfile.

Enabling SSL in Analytics Server

A script to create a trust and/or keystore for Analytics Server can be found in both the Entity Training Server and Event Training Server directories. The scripts are identical.

<RTS_INSTALL>/scripts/generate-keystores.sh<ETS_INSTALL>/scripts/generate-keystores.sh.

Generating a keystore will require a certificate and key in PEM format.

Generating a truststore will require a Root Certificate Authority (Root CA) certificate in PEM format.

Note

The Root Certificate Authority can also be added to the truststore used system-wide by Java. If this option is used, then the trust store does not need to be explicitly set in the steps below. Typically, the global certificate authority certificate truststore is in <JAVA_INSTALL>/lib/security/cacerts with the default password of changeit.

SSL over inbound Analytics Server connections

Edit the keystore and truststore file properties and passwords in launcher/config/jetty-ssl-config.xml.

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:http="http://cxf.apache.org/transports/http/configuration"

xmlns:httpj="http://cxf.apache.org/transports/http-jetty/configuration"

xmlns:sec="http://cxf.apache.org/configuration/security"

xsi:schemaLocation="

http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd

http://cxf.apache.org/transports/http/configuration http://cxf.apache.org/schemas/configuration/http-conf.xsd

http://cxf.apache.org/transports/http-jetty/configuration http://cxf.apache.org/schemas/configuration/http-jetty.xsd

http://cxf.apache.org/configuration/security http://cxf.apache.org/schemas/configuration/security.xsd">

<httpj:engine-factory id="rosette-server-engine-config">

<httpj:engine port="#{ systemProperties['rosapi.port'] }">

<httpj:tlsServerParameters>

<sec:clientAuthentication required="false" />

<sec:keyManagers keyPassword="[key-pass]">

<sec:keyStore type="JKS" password="[keystore-pass]"

file="path/to/keystore.jks"/>

</sec:keyManagers>

<sec:trustManagers>

<sec:keyStore type="JKS" password="[truststore-pass]"

file="path/to/truststore.jks"/>

</sec:trustManagers>

</httpj:tlsServerParameters>

</httpj:engine>

</httpj:engine-factory>

</beans>Change http to https in /launcher/config/com.basistech.ws.cxf.cfg.

urlBase=https://0.0.0.0:${rosapi.port}/restSSL over outbound Analytics Server connections

Create a file named

ssl-conf.conf. Edit the file, adding the following contents:#encoding=UTF-8 #Uncomment the line below to enable SSL debugging #-Djavax.net.debug=ssl -Djavax.net.ssl.keyStore=<full path to the Java keystore file (jks|pkcs12)> -Djavax.net.ssl.keyStorePassword=<KEY_STORE_PASSWORD> -Djavax.net.ssl.trustStore=<full path to the Java truststore file (jks|pkcs12)> -Djavax.net.ssl.trustStorePassword=<TRUST_STORE_PASSWORD>

Edit

<ROSETTE_SERVER_INSTALL>/server/conf/wrapper.conf. Add the following to the end of the file:wrapper.java.additional_file=<path to the ssl-conf.conf file>

for example:

wrapper.java.additional_file=/rosette/server/launcher/config/ssl-conf.conf

Entity Training Server SSL scripts

Entity Training Server is based on the Java-based Open Liberty web container. To enable incoming and outgoing SSL the server configuration must be updated to include the path to the truststore, keystore, and their respective passwords. Additionally the mongodal_config.yaml file (in the config directory) must be updated to set the useSsl flag to true, enabling SSL between Entity Training Server and the mongo instance running on Adaptation Studio.

The enable-rts-ssl.sh script performs the following actions:

Prompts for the location and passwords of the truststore and keystore files.

Copies the truststore and keystore to the configuration directory so that the container has access to them.

Note

if you are re-enabling SSL and using truststores and keystores already present in the configuration directory you will see a warning message about the files being identical and not being copied. This message can safely be ignored.

Copies a

server.template.xmlfile to the configuration directory and renames it toserver.ssl.xml.Replaces the values for the truststore and keystore file names and passwords in the

server.ssl.xmlfile.Updates the

docker-compose.ymlfile and enables mounting theserver.ssl.xmlfile asserver.xml.This updates the server’s configuration.Updates the

.envfile with the name of the SERVER_XML file (server.ssl.xml).Enables ssl in the

mongodal_config.yamlfile.

The disable-rts-ssl.sh script reverses the actions above:

Note

This script does not delete the truststore or keystore from the configuration directory.

Updates the

docker-compose.ymlfile and comments out the mounting of the SERVER_XML file.Updates the

.envfile and comments out the SERVER_XML filename.Deletes the

server.ssl.xmlfile from the configuration directory.

Adaptation Studio SSL scripts

Adaptation Studio is based on multiple technologies: python server, nginx reverse proxy, mongoDB server and React. As such, the configuration for Adaptation Studio will be different from a Java process. All components internal to Adaptation Studio use nginx for outgoing communication. Mongo is exposed to the host machine and can be protected by SSL. One of the primary differences between the Adaptation Studio scripts and Entity/Event Training Server scripts is that the Adaptation Studio scripts use PEM files rather than JKS files. Also, since Adaptation Studio is made of one incoming connection (mongo) and three outgoing connections (Entity Training Server, Event Training Server, Analytics Server) it is possible to selectively enable SSL for mongodb, Entity Training Server and Analytics Server.

The enable-ras-ssl.sh script performs the following actions:

Prompts for the locations of the certificate, key, and root CA PEM files.

Copies the PEM files to WEBSITE_HTTPS_CERT_DIR defined in the

.envfile.If incoming mongodb traffic is to use SSL:

The certificate and key are concatenated into a single file for use by mongo and stored in the WEBSITE_HTTPS_CERT_DIR. This file is named

<certificate name without file extension>-ras-cert-key.pem.The file

proxy-ssl-template.confis copied and renamedproxy-mongo-ssl.conf. This file will contain nginx SSL settings for the certificate and ca certificate to use when internal components communicate with mongodb.The file

mongo-ssl-template-docker-compose.override.ymlis copied and renameddocker-compose.override.yml. This file contains the alternative startup command for mongodb that includes parameters to only accept SSL traffic. Additionally it mounts theproxy-mongo-ssl.conffile so that the ras-proxy service can gain access to the SSL configuration file.

If the outgoing traffic to RS is to use SSL:

The file

proxy-ssl-template.confis copied and renamedproxy-rs-ssl.conf. This file will contain nginx SSL settings for the certificate and ca certificate to use when internal components communicate with Analytics Server.The

nginx-template.conffile is updated to uncomment the inclusion of theproxy-rs-ssl.conffile.The

docker-compose.ymlfile is updated to mount theproxy-rs-ssl.conffile so that nginx can load it.The scheme for ROSETTE_URL is changed to https in

.env

If the outgoing traffic to Entity Training Server is to use SSL:

The file

proxy-ssl-template.confis copied and renamedproxy-rts-ssl.conf. This file will contain nginx SSL settings for the certificate and ca certificate to use when internal components communicate with Analytics Server.The

nginx-template.conffile is updated to uncomment the inclusion of theproxy-rts-ssl.conffile.The

docker-compose.ymlfile is updated to mount theproxy-rts-ssl.conffile so that nginx can load it.Changes the scheme of RTS_URL to https in the

.envfile.

The disable-ras-ssl.sh script reverses the actions above:

Note

This script does not delete the PEM files from the WEBSITE_HTTPS_CERT_DIR directory.

If SSL is to be disabled for incoming mongodb traffic:

Comments out the inclusion of the

proxy-mongo-ssl.conffrom thenginx-template.conffile.Deletes the

proxy-mongo-ssl.conffile.Backs up then deletes the

proxy-mongo-ssl.conffile.

If SSL is to be disabled for outgoing Analytics Server traffic:

Deletes the

proxy-rs-ssl.conffile.Comments out the inclusion of the

proxy-rs-ssl.conffrom thenginx-template.conffile.Comments out the mount of the

proxy-rs-ssl.conffrom thedocker-compose.ymlfile.

If SSL is to be disabled for outgoing Entity Training Server traffic:

Deletes the

proxy-rts-ssl.conffile.Comments out the inclusion of the

proxy-rts-ssl.conffrom thenginx-template.conffile.Comments out the mount of the

proxy-rts-ssl.conffrom thedocker-compose.ymlfile.Changes the scheme of RTS_URL to http in the

.envfile.

You can enable and disable the SSL connection between the browser and Adaptation Studio.

To disable SSL for incoming browser connections to the Studio, use the

disable-browser-ras-ssl.shscript.To enable SSL for incoming browser connections to the Studio, use the

enable-browser-ras-ssl.shscript. You will need a certificate and a key in PEM format.

Event Training Server SSL scripts

Event Training Server requires a certificate and key in PEM file format, in addition to a root certificate in PEM format.

Training mode also requires a keystore and truststore in JKS format.

./scripts/generate-keystores.shwill create the JKS files from the PEM files.

The enable-ets-ssl.sh script performs the following actions:

Prompts for the location and passwords of the truststore and keystore files and attempts to validate them.

Prompts whether the files should be copied to the

./certsdirectory (recommended but not required).Updates

./ets-docker/.env, setting the following:Sets

NGINX_CONF_FILEtonginx-ssl.conf.Sets

NGINX_CERT_PEM_FILE,NGINX_KEY_PEM_FILEandNGINX_TRUSTED_PEM_FILEto the respective PEM files.Sets

ETS_KEYSTORE_PW,ETS_TRUSTSTORE_PW,ETS_KEYSTORE_FILEandETS_TRUSTSTORE_FILE(if in training mode).Sets

ENABLE_OUTGOING_SSLto true (if in training mode).

The disable-ets-ssl.sh script performs the following actions:

Updates

./ets-docker/.envsetting the following:Sets

NGINX_CONF_FILEtonginx-not-ssl.conf.Sets

NGINX_CERT_PEM_FILE,NGINX_KEY_PEM_FILEandNGINX_TRUSTED_PEM_FILEto placeholder files in the./certsdirectory.Deletes

ETS_KEYSTORE_PWandETS_TRUSTSTORE_PW.Sets

ETS_KEYSTORE_FILEandETS_TRUSTSTORE_FILEto placeholder files in the./certsdirectory.Sets

ENABLE_OUTGOING_SSLto false.

Indoc Coref Server SSL scripts